Accurate QoS requires that the subscriber rates in the last mile of an MLPPPoX bundle be properly represented in the LNS. In other words, the rate-limiting functions in the LNS must account for the last-mile on-the-wire encapsulation overhead. The last-mile encapsulation can be Ethernet or ATM.

For ATM in the last mile, the LNS accounts for the following per-fragment overhead:

PID

MLPPP encapsulation header

ATM fixed overhead (ATM encapsulation + fixed AAL5 trailer)

Padding to the 48B cell-payload boundary, as part of the AAL5 trailer

5B of cell header for each 48B of data in an ATM cell
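The ATM accounting above can be illustrated with a short calculation. This is only a sketch of the arithmetic, not the LNS implementation: the function name `atm_wire_bytes` and its parameters are hypothetical, and the PID, MLPPP header, and ATM encapsulation sizes used in the example call are illustrative assumptions, because the actual values depend on the negotiated MLPPP options and the ATM encapsulation in use. The 8B AAL5 trailer and the 48B/5B cell layout are standard.

```python
import math

ATM_CELL_PAYLOAD = 48  # bytes of data carried in each ATM cell
ATM_CELL_HEADER = 5    # bytes of header added to each ATM cell
AAL5_TRAILER = 8       # fixed-size AAL5 trailer, in bytes


def atm_wire_bytes(fragment_payload, pid, mlppp_header, atm_encap):
    """Return the on-the-wire bytes for one MLPPP fragment over ATM.

    All sizes are in bytes. pid, mlppp_header and atm_encap are inputs
    because their sizes are deployment-specific (assumption: the caller
    knows them).
    """
    # AAL5 PDU before padding: fragment plus fixed per-fragment overhead.
    pdu = fragment_payload + pid + mlppp_header + atm_encap + AAL5_TRAILER
    # Padding rounds the PDU up to a whole number of 48B cell payloads.
    cells = math.ceil(pdu / ATM_CELL_PAYLOAD)
    # Each cell carries 48B of data plus a 5B header.
    return cells * (ATM_CELL_PAYLOAD + ATM_CELL_HEADER)


# Illustrative values only: 2B PID, 4B MLPPP header, 10B ATM encapsulation.
print(atm_wire_bytes(100, pid=2, mlppp_header=4, atm_encap=10))  # 159
```

With these example values the 100B fragment grows to a 124B AAL5 PDU, which is padded to 144B (three cells) and occupies 159B on the wire.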

For Ethernet encapsulation in the last mile, the per-fragment overhead is:

PID

MLPPP header per fragment

Ethernet Header + FCS per fragment

Preamble + IPG overhead per fragment
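The Ethernet case is a simple per-fragment sum, sketched below. The function name `ethernet_wire_bytes` and its parameters are hypothetical, and the PID and MLPPP header sizes in the example are illustrative assumptions. The Ethernet constants are the standard untagged values (14B header, 4B FCS, 8B preamble plus 12B inter-packet gap). The sketch covers only the items listed above; it does not model VLAN tags or minimum-frame-size padding.

```python
ETH_HEADER = 14     # destination MAC + source MAC + EtherType, in bytes
ETH_FCS = 4         # frame check sequence, in bytes
PREAMBLE_IPG = 20   # 8B preamble (incl. SFD) + 12B inter-packet gap


def ethernet_wire_bytes(fragment_payload, pid, mlppp_header):
    """Return the on-the-wire bytes for one MLPPP fragment over Ethernet.

    pid and mlppp_header are inputs because their sizes depend on the
    negotiated PPP/MLPPP options (assumption: the caller knows them).
    """
    return (fragment_payload + pid + mlppp_header
            + ETH_HEADER + ETH_FCS + PREAMBLE_IPG)


# Illustrative values only: 2B PID and 4B MLPPP header.
print(ethernet_wire_bytes(100, pid=2, mlppp_header=4))  # 144
```

Because this overhead is paid once per fragment, fragmenting a packet into more, smaller fragments increases the share of last-mile bandwidth consumed by encapsulation.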

The encap-offset command in the sub-profile>egress CLI context is ignored for MLPPPoX. By default, the MLPPPoX rate calculation is always based on the last-mile wire overhead.

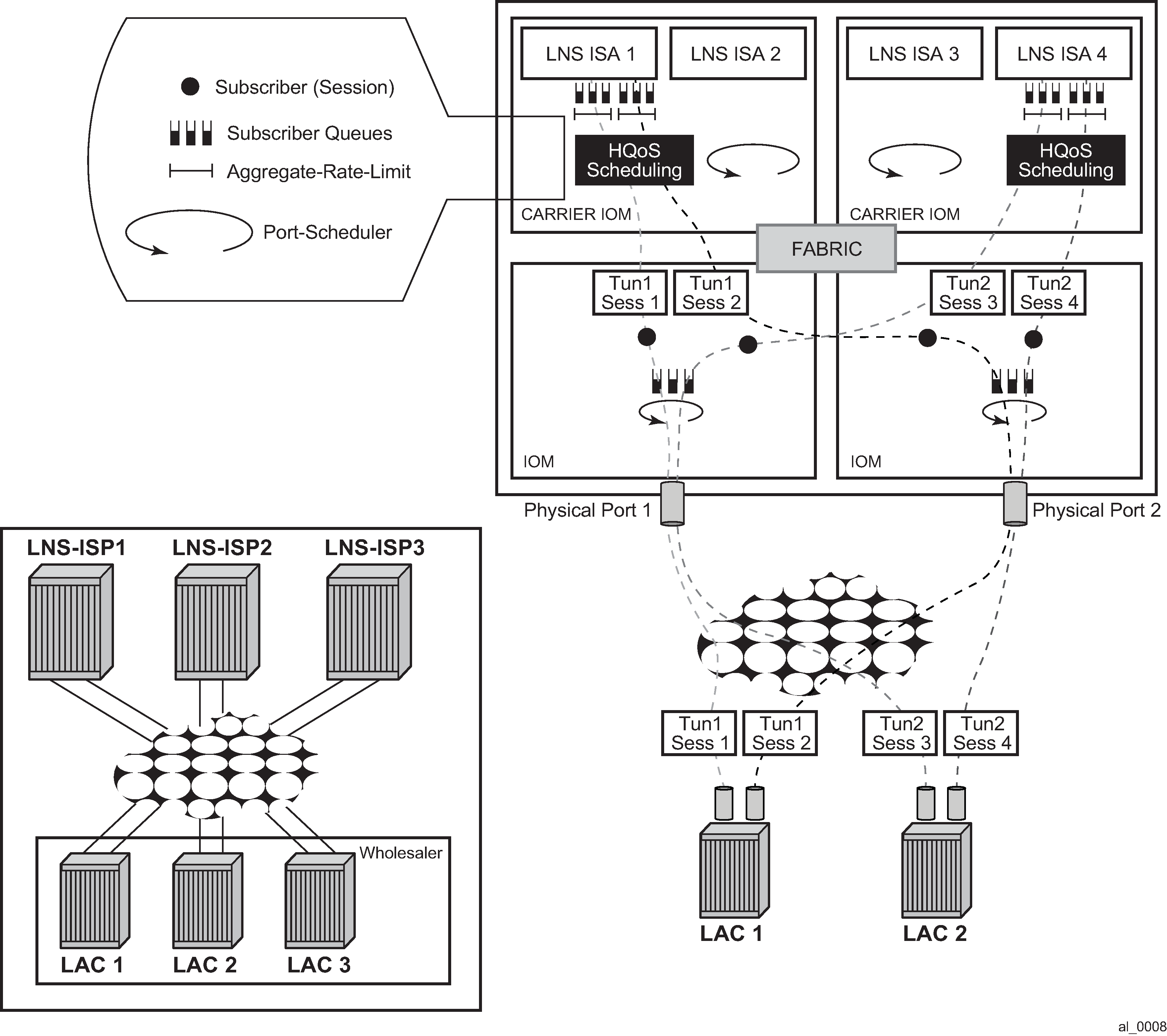

The HQoS rates (port-scheduler, aggregate-rate-limit, and scheduler) on the LNS are based on the wire overhead of the entity to which the HQoS is applied. For example, if the port-scheduler manages bandwidth on the link between the BB-ISA and the carrier IOM, then the rate of that scheduler accounts for the Q-in-Q Ethernet encapsulation on that link, along with the preamble and inter-packet gap (20B).
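As a rough illustration of what accounting for Q-in-Q plus 20B means for a scheduler rate, the sketch below converts a wire rate into the payload rate it can carry. The function names and the choice of what counts as payload are assumptions made for this example; the constants are the standard Ethernet values with two 4B VLAN tags.

```python
ETH_HEADER = 14     # untagged Ethernet header, in bytes
VLAN_TAG = 4        # one 802.1Q tag; Q-in-Q carries two
ETH_FCS = 4         # frame check sequence, in bytes
PREAMBLE_IPG = 20   # preamble + inter-packet gap, in bytes


def qinq_wire_bytes(l2_payload):
    """Bytes on the wire for one Q-in-Q frame carrying l2_payload bytes."""
    return l2_payload + ETH_HEADER + 2 * VLAN_TAG + ETH_FCS + PREAMBLE_IPG


def payload_rate_kbps(wire_rate_kbps, l2_payload):
    """Payload rate (kb/s, rounded down) that fits in a given wire rate."""
    return wire_rate_kbps * l2_payload // qinq_wire_bytes(l2_payload)


print(qinq_wire_bytes(1500))            # 1546
print(payload_rate_kbps(10000, 1500))   # 9702
```

The gap between wire rate and payload rate widens as frames get smaller, since the 46B of per-frame overhead in this example is constant.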

While virtual schedulers (attached by sub-profile) are supported on the LNS for plain PPPoE sessions, they are not supported for MLPPPoX bundles. Only the aggregate-rate-limit, along with the port-scheduler, can be used in MLPPPoX deployments.