An Extended Services Appliance (ESA) is a server that attaches to a host 7750 SR over standard SR system interface ports and runs one to four Virtual Machine (VM) instances that perform multiservice processing. The ESA provides packet buffering and processing and is logically part of the router system. The ESA 100G includes a 24-core Intel Cascade Lake 6252 processor and 192 Gbytes of memory. The ESA 400G includes two 32-core Intel Ice Lake 6338N processors and 512 Gbytes of memory.

The ESA processing rate is the sum of the upstream and downstream rates (for example, 80 Gb/s up and 20 Gb/s down, or 50 Gb/s up and 50 Gb/s down).

The ESA 100G hardware can support up to 100 Gb/s of throughput processing, and the ESA 400G up to 400 Gb/s of processing. However, the maximum ESA ingress and egress throughput varies depending on the buffering and processing demands of a specific application.
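The relationship between the directional rates and the hardware ceiling can be sketched as follows. This is a minimal illustration only: the function names and the capacity table layout are hypothetical, and the check covers the hardware ceiling alone, not the lower limits that a specific application may impose.

```python
# Hardware throughput ceilings stated in the text, in Gb/s.
ESA_CAPACITY_GBPS = {"ESA 100G": 100, "ESA 400G": 400}


def processing_rate_gbps(upstream_gbps: float, downstream_gbps: float) -> float:
    """Return the ESA processing rate: upstream plus downstream."""
    return upstream_gbps + downstream_gbps


def fits_hardware(model: str, upstream_gbps: float, downstream_gbps: float) -> bool:
    """Check a traffic mix against the hardware ceiling only.

    The achievable rate for a given application may be lower.
    """
    rate = processing_rate_gbps(upstream_gbps, downstream_gbps)
    return rate <= ESA_CAPACITY_GBPS[model]


print(fits_hardware("ESA 100G", 80, 20))  # True: 80 + 20 = 100
print(fits_hardware("ESA 100G", 80, 40))  # False: 80 + 40 = 120
```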

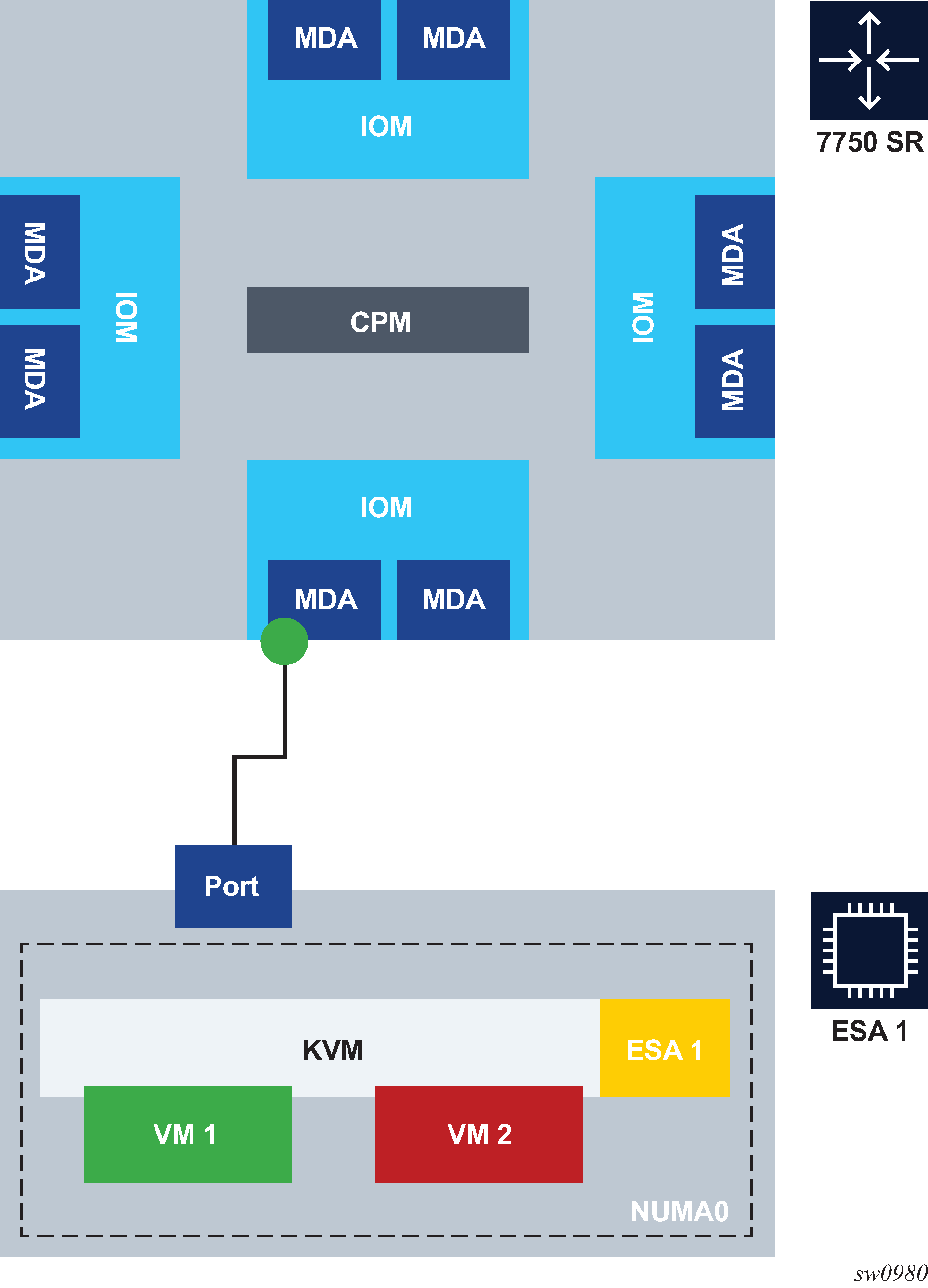

Figure: ESA connection to 7750 SR shows an ESA connected to a 7750 SR.

A direct local fiber connection must be used to connect an ESA port to a 7750 SR port. As with other MDAs and ISAs, all communication passes through the 7750 SR Input/Output Module (IOM), making use of the network processor complex on the host IOM for queuing and filtering functions. The ESA 100G includes a Mellanox ConnectX-5 2-port 100 Gb/s NIC with QSFP28 optics connectors. One or both of the ESA NIC ports can be used to connect to the 7750 SR, within the maximum throughput of 100 Gb/s per NIC.

The ESA 400G includes two Mellanox ConnectX-6 2-port 100 Gb/s NICs with QSFP28 optics connectors. Each NIC has a maximum throughput of 200 Gb/s, and any of the four ESA NIC ports can be used to connect to a 7750 SR port.

The following SR to ESA port speeds are supported:

- 100GE (using QSFP28 optics in both the SR and ESA)

- 40GE (using QSFP+ optics in both the SR and ESA)

- 25GE (using a QSFP28 - SFP28/SFP+ Adapter and SFP28 optics in both the SR and ESA)

- 10GE (using a QSFP28 - SFP28/SFP+ Adapter and SFP+ optics in both the SR and ESA)
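For planning purposes, the supported speeds and the parts each one needs can be kept in a small lookup table. The sketch below only transcribes the list above; the dictionary layout and the `parts_for` helper are hypothetical and are not part of any SR OS tooling.

```python
# Supported SR-to-ESA port speeds, transcribed from the list above.
# The same optics (and adapter, where listed) are used in both the SR and the ESA.
SUPPORTED_LINKS = {
    "100GE": {"adapter": None, "optics": "QSFP28"},
    "40GE": {"adapter": None, "optics": "QSFP+"},
    "25GE": {"adapter": "QSFP28 - SFP28/SFP+ Adapter", "optics": "SFP28"},
    "10GE": {"adapter": "QSFP28 - SFP28/SFP+ Adapter", "optics": "SFP+"},
}


def parts_for(speed: str) -> dict:
    """Return the adapter and optics needed at each end for a port speed."""
    try:
        return SUPPORTED_LINKS[speed]
    except KeyError:
        raise ValueError(f"unsupported SR-to-ESA port speed: {speed}") from None


print(parts_for("25GE")["optics"])  # SFP28
```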

ESA 400G performance may be enhanced by configuring up to four ESA VMs for a single ESA across two CPUs. The two ESA NICs each connect to only one NUMA cell (CPU socket). For each ESA VM, reserve at least one port for SR interconnect. The most common ESA 400G deployment scenarios are as follows:

- one port and one ESA VM

  Use one port per NIC and one ESA VM per CPU socket for ESA 100G compatibility mode.

- two ports and two ESA VMs

  Use one port per NIC and one ESA VM per CPU socket to ensure maximum port and ESA VM performance.

- four ports and four ESA VMs

  Use two ports per NIC and two ESA VMs per CPU socket for maximum performance and density. However, because each CPU socket is shared by two VMs, the throughput for each VM is slightly less than when one VM is used.
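The three scenarios can be pictured as a placement of VMs onto CPU sockets and NIC ports. The sketch below is illustrative only: the `Placement` type, the zero-based socket, NIC, and port indices, and the assumption that NIC *n* is the one attached to socket *n* are all hypothetical labels, not SR OS configuration values.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Placement:
    vm: int      # hypothetical VM index, 1-based
    socket: int  # CPU socket (NUMA cell), 0 or 1
    nic: int     # NIC attached to that socket (assumed to share its index)
    port: int    # port on that NIC, 0 or 1


def plan_esa_400g(vm_count: int) -> List[Placement]:
    """Lay out the 1-, 2-, or 4-VM scenarios, one dedicated port per VM."""
    if vm_count not in (1, 2, 4):
        raise ValueError("sketch covers only the 1-, 2-, and 4-VM scenarios")
    placements = []
    for i in range(vm_count):
        socket = i % 2  # alternate sockets so VMs spread across both CPUs
        port = i // 2   # a second VM on a socket takes that NIC's second port
        placements.append(Placement(vm=i + 1, socket=socket, nic=socket, port=port))
    return placements


for p in plan_esa_400g(4):
    print(p)
```

With four VMs, each socket hosts two VMs and both ports of each NIC are in use, which matches the density scenario in which per-VM throughput is slightly lower.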

Ports for an ESA may be from the same or from different IOMs, XMAs, or MDAs. Any combination of supported port speeds may be used on an ESA. If at least one host-port between the SR and the ESA is up, the ESA instance stays up.

An ESA-VM must be associated with one specific 7750 SR port. One physical 7750 SR port can be used by multiple VMs within an ESA. ESA-VMs may be configured as different types or the same type.

As each ESA-VM may only be associated with one 7750 SR port, LAG cannot be used between ports to an ESA. ESA-to-SR link resilience is handled by provisioning more VM instances than the processing requires (using the ISA group N+1 redundancy model). Functional sparing capacity is also handled by provisioning more VM instances than required.
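As a rough sizing aid, the N+1 idea can be expressed as "enough VMs to carry the load, plus spares". The sketch below is an assumption-laden illustration: the function name is hypothetical, and the per-VM throughput is an input that must come from testing the specific application, because the text gives no per-VM figure.

```python
import math


def vms_to_provision(required_gbps: float, per_vm_gbps: float, spares: int = 1) -> int:
    """Return the number of ESA-VMs to provision under an N+spares model.

    required_gbps: processing rate the service needs (upstream + downstream).
    per_vm_gbps:   measured throughput of one ESA-VM for this application
                   (an assumption supplied by the caller).
    spares:        extra VMs that cover a failed VM or a failed SR-to-ESA link.
    """
    if per_vm_gbps <= 0:
        raise ValueError("per_vm_gbps must be positive")
    active = math.ceil(required_gbps / per_vm_gbps)  # N: VMs needed for the load
    return active + spares


print(vms_to_provision(120, 50))  # 4: three active VMs plus one spare
```

Because an ESA hosts at most four VMs, a result larger than four implies spreading the VMs across more than one ESA.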

Each ESA is managed by one 7750 SR. The ESA software (the hypervisors.tim file, located on the active CPM of the 7750 SR host) can only be instantiated by a 7750 SR and cannot be instantiated in any other virtualized environment. Creation, configuration, deletion, resource allocation, and upgrade of an ESA-VM are all controlled by the 7750 SR CPM.

SR system LLDP must be enabled for ESA use, as LLDP is used to verify connectivity between the configured SR ESA host-ports and the matching configured ESA port for an ESA-VM. To set up an ESA in a 7750 SR system, complete the following actions in any order:

- Install the ESA hardware in a rack, then apply power to the ESA hardware.

- Connect the ESA hardware to a compatible 7750 SR chassis, IOM, or MDA using the appropriate optics.

- From the 7750 SR, configure ESA host and ESA-VM ports; see Configuring an ESA with CLI.

See the 7750 SR ESA 100G Chassis Installation Guide for more information about the first two items in this list.

The ESA hardware is then booted by the 7750 SR CPM and available resources are discovered by the 7750 SR. ESA-VMs are configured as a type and size (number of cores and amount of memory). ESA-VM types include services that also run on ISAs, thereby providing a virtualized ISA function as an ESA-VM within the SR system and as part of an ISA group. An ISA group can only contain physical ISAs or ESA-VMs. Traffic for an ESA-VM enters the 7750 SR and is forwarded to the ESA-VM in a manner identical to that of a traditional ISA.

Multiple ESAs may be configured per IOM and per system as needed for scale.

ESA 100G provides CLI, SNMP, and YANG support for the following hardware monitoring states:

- System health – OK or critical

- PSU health – OK or critical

- CPU temperature – in degrees Celsius

- PSU temperature – in degrees Celsius

ESA 400G provides CLI, SNMP, and YANG support for the following hardware monitoring states:

- ESA health – unknown, OK, degraded, or critical

- PSU health – unknown, OK, degraded, or critical

- Fan redundancy – unknown, redundant, non-redundant, or failed-redundant

- Fan health – unknown, OK, degraded, or critical

- Power supply mismatch – true or false

- Power supply redundancy – unknown, redundant, non-redundant, or failed-redundant

- Temperature health – unknown, OK, degraded, or critical

ESA hardware monitoring events and states are integrated into the SR OS system facility alarms.
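A monitoring script that consumes these states might first check that each reported value is one of the values listed above. The following sketch covers the ESA 400G states only. The key names (such as `esa_health`) and the `is_valid_state` helper are hypothetical; they are not the CLI, SNMP, or YANG identifiers, which are not given here.

```python
# Allowed values for the ESA 400G monitoring states, as listed in the text.
_HEALTH = {"unknown", "OK", "degraded", "critical"}
_REDUNDANCY = {"unknown", "redundant", "non-redundant", "failed-redundant"}

# Key names are illustrative placeholders, not real object names.
ESA_400G_STATES = {
    "esa_health": _HEALTH,
    "psu_health": _HEALTH,
    "fan_redundancy": _REDUNDANCY,
    "fan_health": _HEALTH,
    "power_supply_mismatch": {"true", "false"},
    "power_supply_redundancy": _REDUNDANCY,
    "temperature_health": _HEALTH,
}


def is_valid_state(name: str, value: str) -> bool:
    """Return True if value is a listed value for the named ESA 400G state."""
    return value in ESA_400G_STATES.get(name, set())


print(is_valid_state("fan_redundancy", "failed-redundant"))  # True
print(is_valid_state("psu_health", "redundant"))             # False
```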